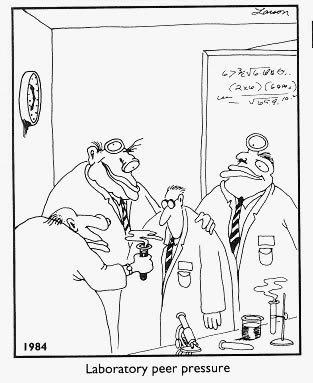

Just what IS ‘peer-review’, exactly?

Of all of the research-related activities I’ve done, short of actual research, peer-review is the one I do the most and the one I’ve been doing the longest. I regularly review for three publications (i.e. I’m in their rota somewhere), and get sporadic requests from others (usually because I’m one of the references). My perspective on peer-review differs from most. That’s not to say that I’m against it or that I have a better idea, but that, as with all processes in science, the imperfections are worth knowing because the devil lies in those details.

So what, exactly, is “peer review”? What does it mean when you publish a manuscript in a “peer-reviewed journal”?

The British Medical Journal lays out their process quite explicitly. I include the link in case you’re that curious. If you don’t want to read it, you won’t miss too much.

When a manuscript is submitted to a journal, it is reviewed first by an editor. For small journals, this can be done by the editor-in-chief. As the journal’s scope increases, the volume of submissions becomes too large for the editor-in-chief to screen alone, so most journals will have section editors. The job of the editor is to determine whether the manuscript fits in the scope of the journal and whether the article will be of interest to the readership.

Most journals are the ‘mouthpiece’ of an organization. The Journal of Strength and Conditioning Research belongs to the NSCA. Medicine & Science in Sports & Exercise belongs to the ACSM. The British Medical Journal and the “BMJ” (which is a whole host of medical journals) are part of the British Medical Association. JAMA is the journal of the American Medical Association. Journals that are exceptions to this rule would be ones like the New England Journal of Medicine (which is technically part of the Massachusetts Medical Society) and the Lancet (which has no affiliation with any medical or scientific organization). Scope, therefore, is determined largely by the membership of the affiliated organization, who are the journal’s primary readers. You obviously don’t send a study on foot surgery to a journal of hand surgery. From there, the lines can blur. A human study on glucose control through diet could be sent to a nutrition journal, a diabetes journal, a physiology journal, an endocrinology journal, or a general medical journal. You probably couldn’t send it to a surgery journal, or a pediatric journal (if it only involved adults), or a molecular biology journal.

To become the editor-in-chief, or any editor at all, of a scientific journal, you generally have to have published and done research work in the arena of that journal. So, from the first read-through, you’ll have gotten some sort of review from a person who would be considered your “peer”, in the sense that they work (at least broadly) in your area.

Once a manuscript has passed the initial screen, it goes through the process that has become known as “peer review”, which is basically “external review”. The standard review is two external reviewers. Some journals use more than two, but even the BMJ uses two external reviewers. Reviewers are asked to make comments and then, for most journals, to make a recommendation on the manuscript. Usually, the options are: 1) Accept with minor revisions; 2) Accept with major revisions; 3) Reject.

Minor revisions are things like spelling mistakes, minor organizational issues (e.g. putting results in the methods section), and expansion of unclear portions. Major revisions usually require further data analysis, or overhauls of entire sections of the paper. Either way, the important part of the decision is “Accept”.

If both peer reviewers agree to Accept, it’s rare for the editor to go against the reviewers. If both peer reviewers reject, it’s also rare for the editor to go against the reviewers. When there’s disagreement, the editor usually has to step in and make the final decision, whether that’s in an editorial committee meeting or on their own.
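The decision pattern described above can be sketched in a few lines of Python. This is only an illustration of the usual case with two reviewers: the names `Recommendation` and `likely_outcome` are made up for this example, and real editors are free to overrule their reviewers.

```python
from enum import Enum


class Recommendation(Enum):
    """The three options a reviewer is usually given."""
    MINOR = "accept with minor revisions"
    MAJOR = "accept with major revisions"
    REJECT = "reject"


def likely_outcome(first: Recommendation, second: Recommendation) -> str:
    """Return the usual outcome given two reviewer recommendations.

    Hypothetical helper: it encodes the common pattern only, not any
    journal's actual policy.
    """
    # Both flavours of "accept" count as an accept for this purpose.
    accepts = [r is not Recommendation.REJECT for r in (first, second)]
    if all(accepts):
        return "accept"          # editor rarely goes against two accepts
    if not any(accepts):
        return "reject"          # editor rarely goes against two rejects
    return "editor decides"      # split reviews: the editor breaks the tie


if __name__ == "__main__":
    print(likely_outcome(Recommendation.MINOR, Recommendation.REJECT))
```

Note that a minor/major split still counts as agreement here, because the part of the recommendation that matters is “Accept”.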

As the author, you don’t see any decisions until the editor has made the final decision. You generally get one letter/email with either some form of “Accept” or “Reject” followed by the peer-reviewers’ comments. The peer reviewers’ job is not usually to be nice, but to cut to the chase of the ways in which the manuscript could be improved. If your manuscript is accepted, you have to satisfy all of the points of feedback in both peer-reviews (if they are in conflict, you generally pick one and justify why you didn’t do the other) before your manuscript will be published. There isn’t generally much discussion and you definitely do not chat it up with the reviewers. Rebuttal on some points is fine (made to the editor) but you have to be prepared to lose the argument too. Depending on the journal, your revised version goes back to the same peer-reviewers to make sure you adequately addressed their concerns.

Reviews come in two main flavours: blinded and unblinded. A true blinded review means that neither the authors nor the reviewers have access to each other’s identities (which includes striking out laboratory names, locations, etc.). Unblinded reviews come in different varieties. Most often, the reviewer is not blind to the author, but the author is blind to the reviewer. Some journals leave everyone unblinded. There are pros and cons to all of these combinations. Reviewers are also expected to declare conflicts of interest. The degree to which this is accomplished is highly variable. Conflicts range from a reviewer developing a product that is used in the study to a reviewer doing research in the same vein that either hasn’t gone to publication yet or is contrary to the manuscript.

So, on the surface, this sounds like a pretty good system: knowledgeable-in-their-field, hopefully unbiased (or minimally biased) reviewers ensuring the quality of the literature being published and consumed by decision-makers (whether professional or not).

The main problem with the existing peer-review system (and I’m not saying anything that hasn’t been said before, even if you’re reading it here for the first time) is that the peer-review process for any journal is only as strong as its weakest reviewer. Most of the time, when I get asked to review, it’s as a methods review or a statistics review. My review style is not dissimilar to what I write on this blog (though in point form and usually longer). My editors-in-chief (for the three publications I review for regularly) took me on because I’m a methodologist. I don’t usually get the simple studies. But ultimately, their decisions to take me on (for better or for worse) were because they felt they needed the expertise in the reviewer pool.

However, I would argue that most journals do not have many methodologists or statisticians in their reviewer pool. Some journals probably don’t need these types of reviewers: A mouse study is already at least two steps removed from being generalizable to human decisions. A cell study, even further. But lack of methodological rigour in human studies that CAN affect management decisions can have wide-reaching implications; and many of the exercise physiology/human physiology researchers don’t collaborate with study designers because the template of “N=10-20 is generally enough” has been passed down through generations of researchers before them.
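To put a rough number on the “N=10-20” template, here is a minimal Python sketch of a power calculation. Everything specific in it is an assumption made for illustration, not something taken from a particular study: a two-group comparison, N counted per group, a medium standardized effect size of 0.5, a two-sided alpha of 0.05, and a normal approximation in place of the exact t-test calculation. The function name `approx_power` is made up for this example.

```python
from math import sqrt
from statistics import NormalDist


def approx_power(n_per_group: int, effect_size: float, alpha: float = 0.05) -> float:
    """Approximate power of a two-sided, two-group comparison of means.

    Uses the normal approximation and ignores the negligible chance of
    rejecting in the wrong direction. All inputs are illustrative
    assumptions, not values from any real study.
    """
    z = NormalDist()  # standard normal distribution
    z_crit = z.inv_cdf(1 - alpha / 2)
    # With equal group sizes, the standardized difference in means has
    # expectation effect_size * sqrt(n / 2).
    return z.cdf(effect_size * sqrt(n_per_group / 2) - z_crit)


if __name__ == "__main__":
    for n in (10, 20, 64):
        print(f"n per group = {n:>2}: power = {approx_power(n, 0.5):.2f}")
```

Under these assumptions, 10 or 20 participants per group leave the study well short of the conventional 80% power target, which is the sort of problem a methodologist would flag at the design stage.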

In an ideal world, I would love for researchers to involve a methodologist up front in the design phase of a project. It’s not dissimilar to asking the reconstructive surgeon to see a patient before the ablative one takes out a cancer so that reconstructive options don’t get accidentally kyboshed. It’s frustrating to receive a complete dataset that ultimately can’t answer the original question, a situation that could have been prevented if the statistical analysis hadn’t been an afterthought in the protocol. Protocols are published by the Cochrane Collaboration because a) it lets people know that the project is under way, to avoid work duplication, and b) it opens the floor for discussion and problem identification, so that issues might be addressed before it’s too late.

In short, peer-review is only as good as the reviewers. Certainly _some_ review is better than no review at all, which is why peer-reviewed papers are generally more robust than publications that are NOT peer-reviewed. But I think there is still considerable variation within the category of “peer-reviewed” that should not be ignored. Peer-review is not an automatic, blind stamp of quality approval. The research still has to stand on its own.