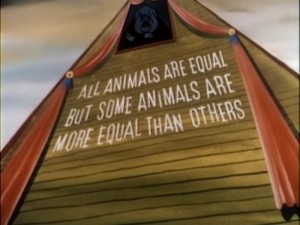

Animal Farm Evidence: Some studies are not more equal than others.

Something interesting happened today. A social media post went out about a study showing an association between strength training in individuals older than 65 years of age and all-cause mortality.

This, of course, was re-posted and praised in the echoes that followed because nothing feels quite so good as being “right” about your choices.

I don’t have a problem with this per se, except that this is a great example of how personal preferences and (dare I use the term) bias come into play in the pseudo-practice of evidence-based practice.

I am not often asked (I’m not that popular), but when I am, the question usually runs along the lines of, “For those of us who are not experts in statistics or research methods, how can WE practice in an evidence-based way?” And while I think the answer is that it is largely on you to start cultivating that kind of knowledge (just like you cultivated your practice-knowledge), there are a few things integral to evidence-based practice that don’t necessarily require graduate-level knowledge.

At its core, evidence-based practice is a skill that requires consistent application of an analytic approach to a practice question or problem. In this application, we also learn to identify our own biases that fly in the face of research evidence and to reconcile them either by explaining why we have chosen to ignore a study, or why we have chosen to change our opinion about the solution to the problem.

I’m going to quickly go through this study to point out its major features because I think it’s a useful lesson on how easy it is to become complacent about our own preconceived ideas and how we choose to emphasize certain things and not others.

Kraschnewski JL, Sciamanna CN, Poger JM, et al. Is strength training associated with mortality benefits? A 15-year cohort study of US older adults. Preventive Medicine, 2016 (ahead of print). doi: 10.1016/j.ypmed.2016.02.038

Methods

The dataset for this cohort study was taken from the National Health Interview Survey (NHIS) for 1997-1999 and then linked to death certificate data up to 2011. The NHIS is a survey administered by the US Census Bureau to a sample of the US population by state. This study only included those respondents of the NHIS who were 65 years old or older.

The main variable of interest was the answer to the question, “How often do you do leisure-time physical activities specifically designed to strengthen your muscles, such as lifting weights or doing calisthenics?” Respondents were asked to provide both the number of times and the unit of time over which that number spanned (e.g. “per week”, “per month”). The authors then split these respondents into those who met the minimum ACSM guideline of twice per week and those who did not.

Additional variables collected from the survey included answers to questions about intensity (roughly, whether they sweated or breathed harder) and time spent performing these activities. The respondents were then further categorized into classes of activity: highly active, sufficiently active, insufficiently active and inactive.

Death certificate information was collected for all-cause mortality, cardiac-related mortality and cancer-related mortality.

They also collected standard demographic information like BMI, educational status, social behaviours and the presence of certain health problems.

Results

Of the 30,162 adults aged 65 years or more in the study sample, 9.6% met the criteria of strength training at least twice weekly. Almost 32% of the sample died by 2011.
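To give a sense of scale, here is a rough sketch that turns those percentages back into approximate head counts. The function name `approx_count` is my own, and because the percentages above are rounded (and "almost 32%" is treated as 32% here, which is an assumption), the results are ballpark figures, not the counts reported in the paper.

```python
# Rough head counts implied by the percentages quoted above.
# The inputs are rounded figures from the text, so the outputs are approximate.

SAMPLE_SIZE = 30_162           # adults aged 65+ in the study sample
PCT_STRENGTH_TRAINING = 9.6    # met the twice-weekly guideline
PCT_DIED = 32.0                # "almost 32%", treated as 32% (assumption)


def approx_count(total: int, percent: float) -> int:
    """Return the approximate number of people that `percent` of `total` represents."""
    return round(total * percent / 100)


strength_trainers = approx_count(SAMPLE_SIZE, PCT_STRENGTH_TRAINING)
deaths = approx_count(SAMPLE_SIZE, PCT_DIED)

print(f"Roughly {strength_trainers:,} respondents strength trained twice weekly")
print(f"Roughly {deaths:,} respondents had died by 2011")
```

In other words, the "strength training" group is on the order of 2,900 people out of about 30,000.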

The primary analysis showed that those respondents who did strength training at least twice a week had 45% lower odds of all-cause mortality, 19% lower odds of death from cancer and 41% lower odds of cardiac-related death.

After two adjusted models (adding adjustments for demographic variables, then for health behaviours and the presence of other health problems), the protective effects of strength training on cancer-related and cardiac-related deaths were no longer statistically significant. The protective effect on all-cause mortality remained statistically significant, but it shrank to 19% lower odds (odds ratio 0.81, up from the primary analysis odds ratio of 0.55).
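If the jump between "odds ratio" and "percent lower odds" isn't obvious, the arithmetic is just the distance of the odds ratio from 1. Here is a minimal sketch; the function name `percent_change_in_odds` is mine, and the only inputs are the two all-cause mortality odds ratios quoted above.

```python
def percent_change_in_odds(odds_ratio: float) -> float:
    """Return the percent change in odds implied by an odds ratio.

    Negative values mean lower odds; positive values mean higher odds.
    An odds ratio of exactly 1 means no difference between groups.
    """
    if odds_ratio <= 0:
        raise ValueError("an odds ratio must be positive")
    # Rounded to one decimal place to hide floating-point noise.
    return round((odds_ratio - 1.0) * 100.0, 1)


# All-cause mortality odds ratios as quoted in the text.
unadjusted = percent_change_in_odds(0.55)
adjusted = percent_change_in_odds(0.81)

print(f"Primary (unadjusted) analysis: {unadjusted:+.0f}% odds")
print(f"Fully adjusted analysis:       {adjusted:+.0f}% odds")
```

This prints -45% for the primary analysis and -19% for the adjusted one. Note that these are changes in odds, which are not the same thing as changes in risk.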

My Thoughts

After reading this study, I’m reminded of other studies, such as many of the “red and/or processed meat will kill you” studies; “eggs may or may not kill you” studies; “fat will kill you” studies; “carbs will kill you” studies and any of the studies looking at health behaviours from the NHANES series of databases or the Nurses’ Health Study databases.

All of these studies have the same things in common:

1) They are all survey-based studies.

2) They are all single-point survey-based studies. Respondents were asked at a single point in time what they were doing AT THAT TIME; not before, not after.

3) They are all cohort study designs.

4) They all use regression (some more modified than others) as the statistical method of analysis.

5) They all looked at all-cause mortality.

6) The primary, non-adjusted analysis was almost always the one most publicized.

7) Adjusting for possible confounding and interacting things always resulted in a blunting of the protective or harmful effect.

Each of these components has limitations. These limitations are inherent to the study design and don’t change from one study to the next. Measuring all-cause mortality (which is basically the death rate from any cause, from a heart attack or cancer, to being killed by a falling coconut) has the inherent limitation that there may be no plausible connection between the risk factor of interest and a substantial portion of the causes of death in that sample. Survey-based data collection is no different than dietary recall: They’re both surveys.

This brings me to the main body of my argument. Practicing in an evidence-based framework means uniformly applying those principles to all studies, regardless of whether you personally agree with the results or not. You cannot use the argument, “Correlation does not always signify causation,” for one study and not another. You cannot criticize the inherent limitations of recall-based surveys in one study and magically believe that recall is somehow just fine in another.

This isn’t to say that you should give up lifting weights or strength training. For one, if you aren’t 65 years old or older, the results of this study don’t really apply to you (limits of generalizability). For another, you’re probably not going to stop anyways. However, large survey-based cohort studies are often so limited that their ability to bolster any practice is meager at best. Their strength lies in using really big numbers to generate questions that you might not find in smaller-scale populations. If you want evidence to show that you’re “doing the right thing”, you probably should look elsewhere.

Just because you agree with the results doesn’t make the study design less limited. Within a design category, all studies are inherently equal. If you reject one based on its inherent design limitations, then you have to reject them all, even if some of them make you feel warm and fuzzy inside. You don’t get to turn your nose up at “Processed Meat Will Kill You” and then embrace “Lifting Makes You Live Longer”, not without some pretty lengthy explaining anyways.